- The Summary AI

🔥 OpenAI Sora Puts You in Videos

PLUS: ChatGPT Gets Shopping Checkout

Welcome back!

With Sora 2, OpenAI is stepping directly into social media, letting users insert themselves into AI-generated videos complete with synchronized audio and realistic physics. It’s an experiment to see whether AI can go from tool to entertainment. Let’s unpack…

Today’s Summary:

🔥 OpenAI launches Sora 2 with “cameos”

💳 ChatGPT adds in-chat shopping

👗 Google releases AI shopping visual search

⚖️ California passes AI safety law

🗣️ Amazon unveils Echo lineup for Alexa+ AI

🔮 What will AI look like in 2030?

🛠️ 2 new tools

TOP STORY

OpenAI Sora 2 lets users place themselves in AI videos

The Summary: OpenAI has released Sora 2, its new video and audio generation model, along with a social app built around it. The model produces short clips with synchronized audio and realistic physics, and early testers say it is on par with Google Veo 3. Its standout feature is “cameos,” which let users place themselves and their friends into AI-generated scenes.

Key details:

Cameos use a one-time recording of the user’s likeness

Audio is built-in with synchronized dialogue and effects

The Sora feed prioritizes content from friends

Videos include visible watermarks and C2PA metadata

Meta launched a similar AI video feed called Vibes

Currently invite-only, rolling out first in the US and Canada

Why it matters: Sora is OpenAI’s attempt to move beyond tools and build a social platform. Instead of serving as invisible infrastructure for creators, it turns AI into entertainment. The open question is whether AI-generated content can hold users’ attention long enough to sustain a network that competes with TikTok and Instagram.

FROM OUR PARTNERS

What The Smartest Teams Do Differently

Turn customer feedback into evidence that moves your product roadmap faster

For PMs who need buy-in fast: Enterpret turns raw feedback into crisp, evidence-backed stories.

Explore any topic across Zendesk, reviews, NPS, and social; quantify how many users are affected and why; and package insights with verbatim quotes stakeholders remember.

Product teams at companies like Canva, Notion, and Perplexity use Enterpret to manage spikes, stack-rank work, and track sentiment after launches, so you can show impact rather than just lists of what shipped.

Replace hunches with data that drives planning, sprint priorities, and incident triage.

OPENAI

OpenAI adds shopping to ChatGPT with Stripe payments

The Summary: OpenAI has added Instant Checkout to ChatGPT, starting with Etsy sellers in the US and soon expanding to all Shopify merchants. The feature runs on the Agentic Commerce Protocol (ACP), a new open-source standard co-developed with Stripe that enables secure in-chat purchases while merchants keep control of fulfillment and payments. Users can go from discovery to checkout without leaving the ChatGPT window.

Key details:

Available initially to US ChatGPT Free, Plus, and Pro users

Launches with Etsy sellers; Shopify merchants will be added next

ACP handles secure payments through Stripe and is designed to work across payment providers

Multi-item carts and international expansion are on the roadmap

Why it matters: This feature positions ChatGPT as more than a recommendation engine: it becomes an e-commerce channel in its own right. The Agentic Commerce Protocol could standardize how AI agents transact, letting merchants integrate once and reach more platforms. For users, checkout moves closer to the moment of intent, shrinking the gap between “I want this” and “I bought this.”

Google AI Mode brings multimodal shopping

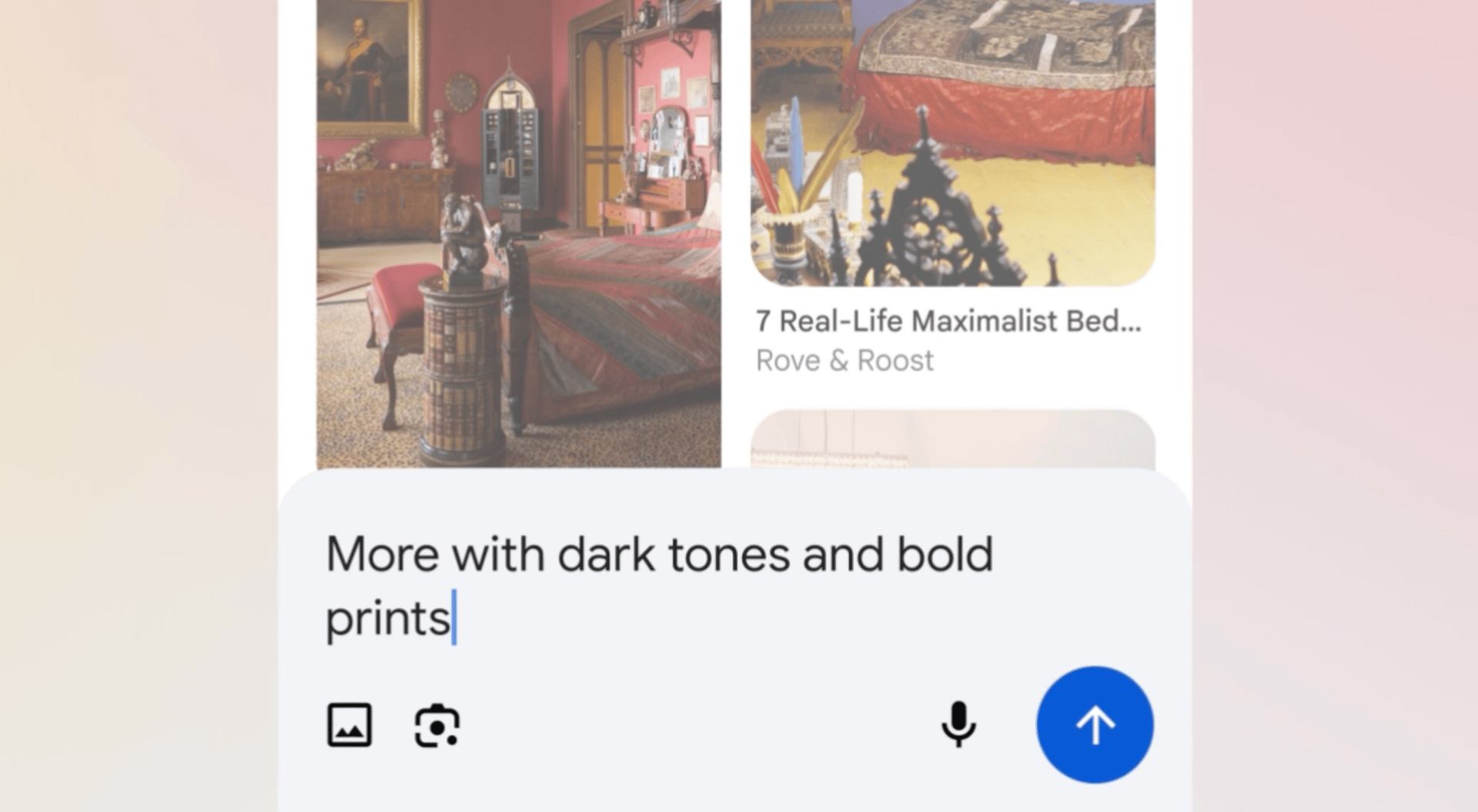

The Summary: Google has expanded AI Mode with new visual exploration tools powered by Gemini 2.5. Users can now search from a photo and refine the visual results with follow-up prompts. A new “visual search fan-out” system runs multiple background queries to pick up subtle details in product images. The update also ties shopping results into Google’s Shopping Graph of more than 50 billion product listings.

Key details:

AI Mode now supports refining product image results with follow-ups like “show more ankle length”

Visual search fan-out scans images for both main and secondary objects, launching parallel queries for richer results

The Shopping Graph refreshes more than 2 billion product listings every hour, keeping results from global retailers current

On mobile, users can search inside product images by asking contextual follow-up questions about what’s displayed

Why it matters: Google is making AI Mode work better for vague or incomplete ideas. You can start with a photo, a rough description, or even a mood, and AI Mode will return visual results you can refine. Because it picks up subtle details in images, follow-ups like “show me more but in darker color” return relevant new options.

QUICK NEWS

That’s all for today!

If you liked the newsletter, share it with your friends and colleagues by sending them this link: https://thesummary.ai/