🔥 GPT-5.3-Codex

Seedance 2.0 Beats Veo 3.1 in Video

Welcome back!

OpenAI released GPT-5.3-Codex, a new coding model that helped manage its own deployment and training. It tops coding benchmarks like SWE-Bench Pro and Terminal-Bench 2.0, runs 25% faster, and is classified as a “high-capability cybersecurity model”. Let’s unpack…

Today’s Summary:

🔥 OpenAI’s GPT-5.3-Codex leads coding benchmarks

🎥 Seedance 2.0 beats Veo 3.1 in video control

📚 Google updates Gemini 3 Deep Think

📣 Claude stays ad-free

🖼️ Qwen Image 2.0 nails text rendering

💻 MiniMax M2.5 delivers Opus-class coding at 1/20 the price

🛠️ 2 new tools

TOP STORY

OpenAI releases GPT-5.3-Codex coding model

The Summary: OpenAI released GPT-5.3-Codex, using the model itself to debug training runs, manage deployment, and evaluate results. It runs 25% faster, tops coding benchmarks, and can sustain multi-day autonomous projects. OpenAI classified it as its first "high-capability" cybersecurity model, trained to identify software vulnerabilities.

Key details:

Achieved 77.3% on Terminal-Bench 2.0, beating Claude Opus 4.6 by 12 percentage points, though testers find Opus still wins in real-world tasks

The smaller, faster Codex-Spark variant outputs 1,000 tokens per second running on Cerebras chips

Codex users favor speed for well-defined tasks, while Claude Opus users prefer its precision for complex reasoning

Available to paid ChatGPT users via the Codex app and CLI

Why it matters: When Codex debugs its own training infrastructure, it creates a flywheel where each model generation helps build the next. The cybersecurity classification matters because vulnerability discovery at scale flips the economics of software security: finding bugs could become cheaper than writing them.

FROM OUR PARTNERS

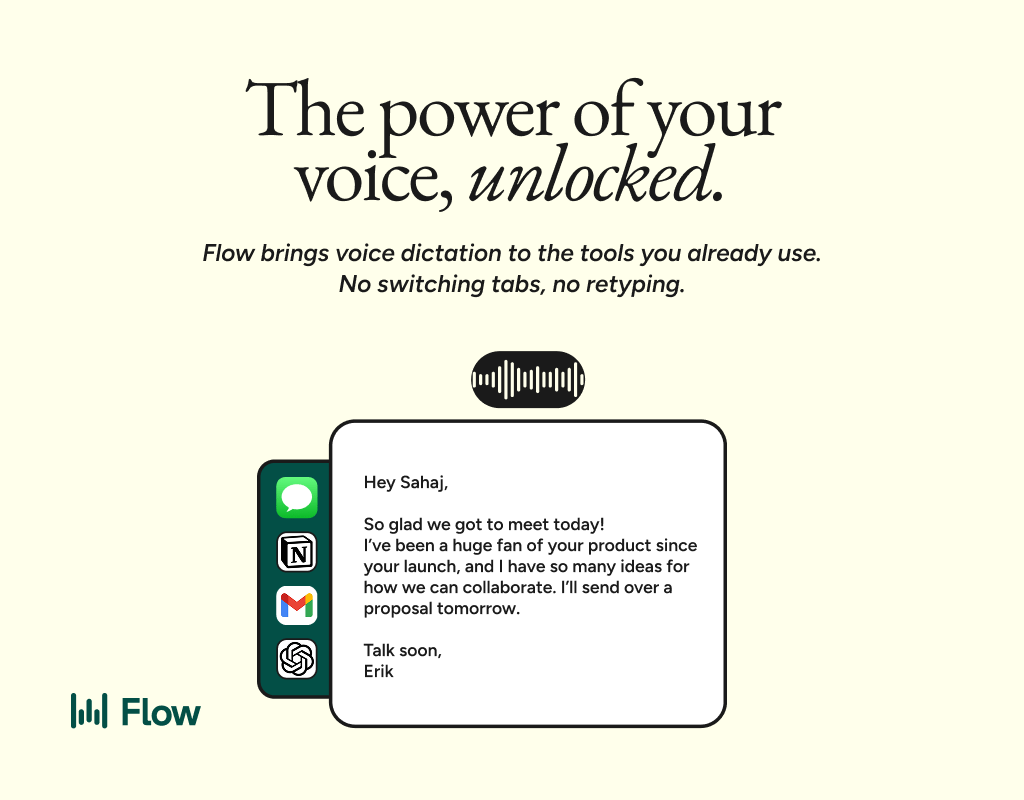

Better Prompts by Talking

Better prompts. Better AI output.

AI gets smarter when your input is complete. Wispr Flow helps you think out loud and capture full context by voice, then turns that speech into a clean, structured prompt you can paste into ChatGPT, Claude, or any assistant. No more chopping up thoughts into typed paragraphs. Preserve constraints, examples, edge cases, and tone by speaking them once. The result is faster iteration, more precise outputs, and less time re-prompting. Try Wispr Flow for AI or see a 30-second demo.

BYTEDANCE

Seedance 2.0 brings director-grade camera work to AI video

The Summary: ByteDance released Seedance 2.0, a multimodal AI video generator that processes images, videos, audio, and text simultaneously to create 15-second clips with sound. The model lets users upload example videos to extract camera movements or effects, then swap characters or extend scenes based on the template. The launch drove Chinese AI stocks up 20% and drew comparisons to DeepSeek’s “viral moment”.

Key details:

Handles up to 12 input files (images, videos, audio tracks) in a single generation with automatic sound effects and music

Reference feature extracts camera work and motion from uploaded videos and applies them to new content

15-second clips include narrative arcs with a beginning, middle, and end rather than the abrupt cutoffs seen in Veo and Sora

Pricing starts at $9.60/month, with effective costs as low as $0.42 per shot because a high success rate means fewer wasted generations than with earlier models
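The link between success rate and effective cost per shot can be sketched with simple arithmetic. This is a minimal illustration, assuming each generation attempt costs the same and succeeds independently; the function name and the dollar figures below are hypothetical and are not Seedance pricing data.

```python
def effective_cost_per_shot(cost_per_attempt: float, success_rate: float) -> float:
    """Expected spend per usable clip when attempts succeed independently."""
    if not 0 < success_rate <= 1:
        raise ValueError("success_rate must be in (0, 1]")
    # The expected number of attempts until the first usable clip is
    # 1 / success_rate (mean of a geometric distribution).
    return cost_per_attempt / success_rate


# Hypothetical numbers for illustration only.
ATTEMPT_COST = 0.30
for label, rate in [("high success rate", 0.9), ("low success rate", 0.2)]:
    cost = effective_cost_per_shot(ATTEMPT_COST, rate)
    print(f"{label}: ${cost:.2f} per usable shot")
```

Under these assumptions, the same per-attempt price yields a much lower effective cost when most generations are usable, which is the effect the pricing note describes.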

Why it matters: Beyond raw quality improvements, the new reference-based control is a genuine workflow advance. Creators can specify exact camera movements and effects through example videos rather than vague text descriptions. Audio generation remains the bottleneck for chaining clips into longer pieces, but the system could compress traditional storyboarding and animation pipelines into prompt-based workflows.

Google upgrades Gemini 3 Deep Think for engineers and scientists

The Summary: Google DeepMind upgraded Gemini 3 Deep Think, targeting complex problems in science, research, and engineering where data is messy and solutions aren’t always clear-cut. Google AI Ultra subscribers get access through the Gemini app. The model achieved 84.6% on ARC-AGI-2 and earned gold-level performance at the Physics and Chemistry Olympiads.

Key details:

Scored 84.6% on ARC-AGI-2, outperforming Claude Opus 4.6 (68.8%) and GPT-5.2 (52.9%) by wide margins

Achieved an Elo rating of 3,455 on Codeforces competitive programming, compared to Claude's 2,352

Can convert hand-drawn sketches into 3D-printable files by modeling complex shapes from drawings

Set a new record of 48.4% on Humanity's Last Exam, designed to test the limits of frontier AI models
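To put the Codeforces rating gap in perspective, here is a rough sketch using the standard Elo expected-score formula. It assumes the classical logistic Elo curve with a 400-point scale applies; Codeforces uses its own rating variant, so treat the result as an approximation. The function name is illustrative.

```python
def elo_expected_score(rating_a: float, rating_b: float) -> float:
    """Expected score of player A against player B under classical Elo."""
    # Standard Elo: a 400-point rating gap corresponds to 10:1 odds.
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


# Ratings quoted in the key details above.
DEEP_THINK = 3455
CLAUDE = 2352

gap = DEEP_THINK - CLAUDE
expected = elo_expected_score(DEEP_THINK, CLAUDE)
print(f"Rating gap: {gap} points")
print(f"Expected score for the higher-rated model: {expected:.4f}")
```

Under that assumption, a gap of more than 1,100 points implies the higher-rated side would be expected to win nearly every head-to-head contest.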

Why it matters: Researchers now have access to reasoning tools that can catch errors in peer review and handle domains with sparse training data. The gap on reasoning benchmarks is wide: 84.6% on ARC-AGI-2 against 68.8% for Claude Opus 4.6 and 52.9% for GPT-5.2. The model's ability to work with messy, incomplete inputs addresses a core challenge in research environments where clean datasets are rare.

That’s all for today!

If you liked the newsletter, share it with your friends and colleagues by sending them this link: https://thesummary.ai/