The Summary AI

🔥 DeepSeek V3.2 Matches GPT-5

PLUS: Sutskever Says Scaling Era Is Over

Welcome back!

DeepSeek just released its V3.2 models, which match GPT-5 on reasoning benchmarks while cutting inference costs by more than 70%. Open-source models have now firmly reached top-tier performance. Let’s unpack…

Today’s Summary:

🔥 DeepSeek V3.2 challenges GPT-5

🖼️ Black Forest Labs launches FLUX.2

🧠 Ilya Sutskever says AI scaling era is over

🚨 OpenAI issues internal “code red”

📸 Z-Image model adopted by ex-Stable Diffusion users

🌀 Mistral Large 3 family released

🛠️ 2 new tools

TOP STORY

DeepSeek-V3.2 Matches GPT-5

The Summary: DeepSeek has released V3.2 and V3.2-Speciale, two open-source AI models that rival GPT-5 and Gemini 3 Pro on reasoning and coding benchmarks. The models pair a sparse attention system that cuts inference costs by about 70% with gold-level performance in math and programming olympiads.

Key details:

DeepSeek Sparse Attention (DSA) reduces inference cost from $2.40 to $0.70 per million tokens

The 685B-parameter V3.2-Speciale achieved gold-level results in the 2025 IMO, IOI, ICPC, and CMO competitions

Both models are released under the MIT license and can be downloaded from Hugging Face, with commercial use permitted

Community tests show V3.2 running at ~20 tokens/sec on an M3 Ultra Mac Studio with 512GB of RAM
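A quick back-of-the-envelope check of the headline saving, using only the two per-million-token prices quoted above. The helper name `percent_reduction` is our own, added for illustration only.

```python
# Per-million-token prices quoted for DeepSeek V3.2 (USD), before and after DSA
old_price = 2.40
new_price = 0.70

def percent_reduction(old: float, new: float) -> float:
    """Hypothetical helper: percentage drop going from `old` to `new`."""
    return (old - new) / old * 100

reduction = percent_reduction(old_price, new_price)
print(f"{reduction:.1f}% cheaper")  # prints "70.8% cheaper"
```

Dividing the $1.70 saving by the original $2.40 gives roughly 70.8%, consistent with the "more than 70%" figure in the intro.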

Why it matters: DeepSeek continues to reshape the global AI race by making GPT-5-level reasoning cheap enough to run in a home lab. Kimi K2 Thinking and DeepSeek now represent two strong poles of open AI, challenging the proprietary API dominance that defined the past three years.

FROM OUR PARTNERS

Boost Productivity with Smarter AI Prompts

Want to get the most out of ChatGPT?

ChatGPT is a superpower if you know how to use it correctly.

Discover how HubSpot's guide to AI can elevate both your productivity and creativity to get more things done.

Learn to automate tasks, enhance decision-making, and foster innovation with the power of AI.

IMAGE AI

Black Forest Labs launches FLUX.2

The Summary: Black Forest Labs has released FLUX.2, a new generation of image models, including open-weight variants, that merge text-to-image generation and image editing into a single system. FLUX.2 supports up to ten reference images, generates images of up to 4 MP, and delivers high-quality typography and structured prompt handling.

Key details:

FLUX.2 [Dev] is a 32B-parameter open-weight model that handles both generation and editing

Supports editing at up to 4 MP, up to 10 reference images, and JSON-style prompts

All variants share a VAE module released under the Apache 2.0 license

On the Arena image leaderboard, FLUX.2 ranks just behind Google’s Nano Banana Pro and ahead of other open-weight rivals

Why it matters: Choosing FLUX.2 over models like Google’s Nano Banana comes down to control and cost. Google’s model locks users into a closed API, while FLUX.2 can run locally or through multiple providers. It’s slower to set up but cheaper at scale.

FROM OUR PARTNERS

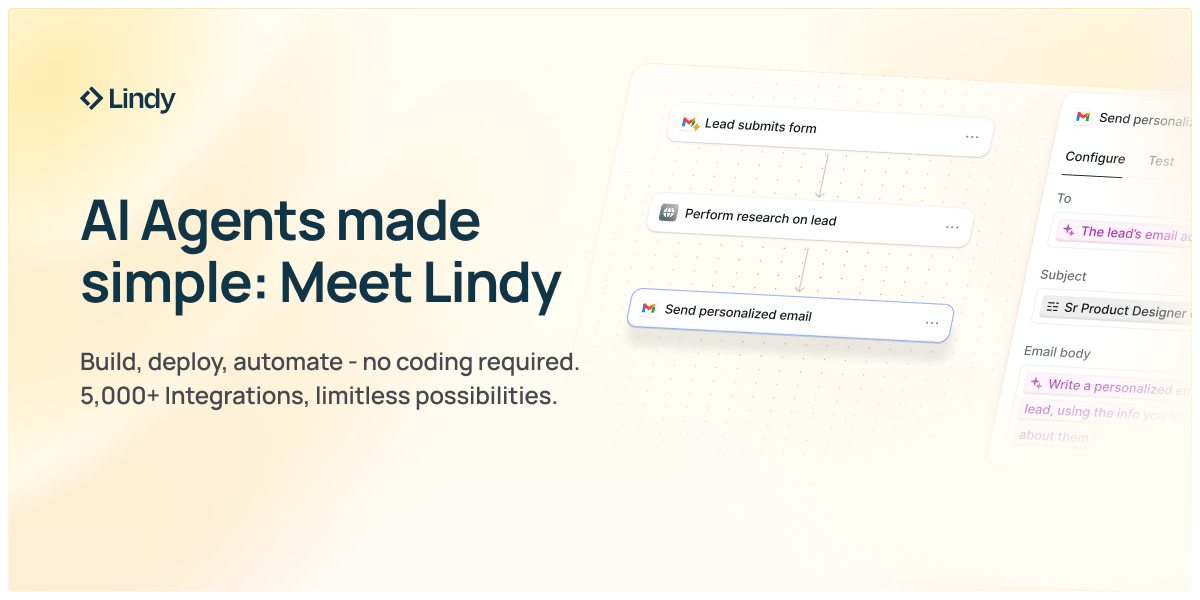

Build Your Own AI Agent in Minutes

Build smarter, not harder: meet Lindy

Tired of AI that just talks? Lindy actually executes.

Describe your task in plain English, and Lindy handles it, from building booking platforms to managing leads and sending team updates.

AI employees that work 24/7:

Sales automation

Customer support

Operations management

Focus on what matters. Let Lindy handle the rest.

OPENAI

Ilya Sutskever declares the end of the AI scaling era

The Summary: Ilya Sutskever, cofounder of OpenAI and now head of Safe Superintelligence Inc., says the AI industry has reached the limits of “just add GPUs.” He argues that scaling alone can’t solve the core problem: AI’s failure to generalize as well as humans do. Sutskever believes the next breakthroughs will come from new learning principles rather than ever-larger clusters.

Key details:

Sutskever calls 2020–2025 the “age of scaling,” driven by ever more compute and data, and says the field is now entering an “age of research”

He says today’s models show “jaggedness”: they post stellar benchmark results but remain brittle in real-world use

He aims to build systems that keep learning, rather than pre-trained “know-it-alls”

He forecasts that superhuman-level learning could arrive within 5–20 years and proposes aligning AI with “care for sentient life”

Why it matters: If Sutskever is right, AI progress will shift from brute-force scaling of GPU farms to a new phase of research. The competitive edge over the next decade will come from understanding how machines learn and why models fail.

QUICK NEWS

OpenAI issues internal “code red” as Google gains ground

Z-Image model emerges as the new favorite for ex-Stable Diffusion users

Mistral releases Large 3 open model family

TOOLS

🥇 New tools

That’s all for today!

If you liked the newsletter, share it with your friends and colleagues by sending them this link: https://thesummary.ai/